Day Twelve

The lego-sorter project that got me started on my deep learning project posted an update today! Remember, it was this section in the lego-sorter software breakdown where I discovered the Practical Deep Learning course:

And then several things happened in a very short time: about two months ago HN user greenpizza13 pointed me at Keras, rather than going the long way around and using TensorFlow directly (and Anaconda actually does save you from having to build TensorFlow). And this in turn led me to Jeremy Howard and Rachel Thomas’ excellent starter course on machine learning.

Within hours (yes, you read that well) I had surpassed all of the results that I had managed to painfully scrounge together feature-by-feature over the preceding months, and within several days I had the sorter working in real time for the first time with more than a few classes of parts. To really appreciate this a bit more: approximately 2000 lines of feature detection code, another 2000 or so of tests and glue was replaced by less than 200 lines of (quite readable) Keras code, both training and inference.

Today's update is mostly about the categories of sorted Lego (and an attempt to find buyers for the pieces). Mattheij briefly mentions the effect of the growing size of the training set on recognition accuracy (16k to 60k images and hitting diminishing returns) and the classifier used (RESNET50), but I mentioned the article mainly for its throwback value, not its deep learning insights.

Linear and nonlinear

Yesterday, we implemented a neural network in a spreadsheet. Sort of.

Wait, sort of? Did I lie to you? I might have. Somebody later told me that a neural network that consists only of linear functions isn't a real neural network. Maybe that person was just gatekeeping, but I looked up the definition of a linear function (any function where the graph of inputs and outputs form a straight line) and matrix multiplication seems to fit that description, and it also seems like most neural networks include a nonlinear activation function (a function that acts on the activations produced by multiplying our inputs and weights), which makes sense because if all you have is linear functions in your model, what you really have is a linear model, and if we want our network to be truly flexible we would probably want it to be able to handle inputs and outputs that don't map onto a straight line, so maybe that person was on to something.

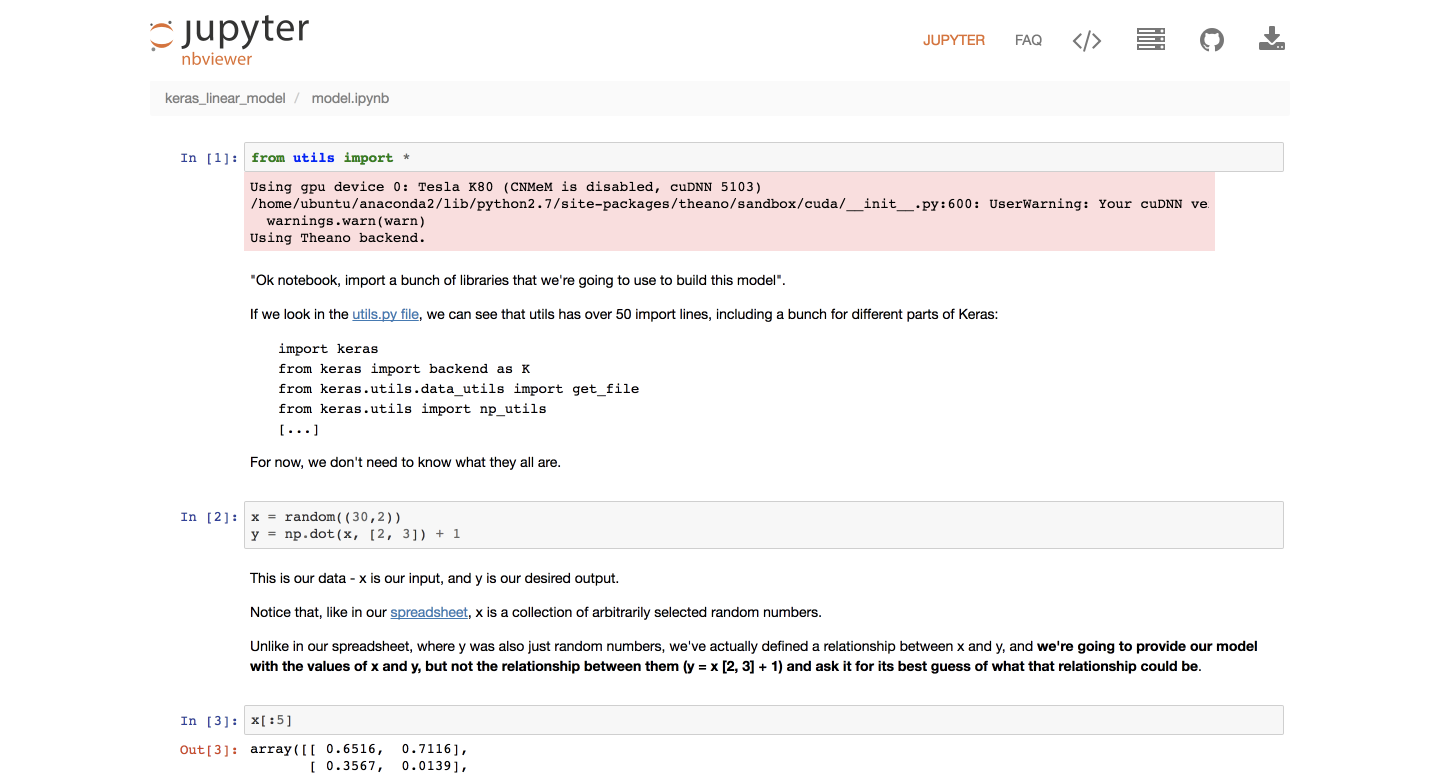

Keeping in mind that we'll want to be include nonlinear functions alongside our linear functions in the future, what we're going to do today is take that basic neural network from yesterday and implement it in code instead of a spreadsheet.

To do that, we're going to use Keras.

Keras

The last time I attempted to explain Keras was on Day Three:

The VGG models sit on top of Keras, a library that makes it easier to talk to neural networks. Keras sits of top of Theano, a library that takes Python code and turns it into code that can interact with the GPU via CUDA (the nVidia programming environment) and cuDNN (the CUDA deep neural network library).

"A library that makes it easier to talk to neural networks" is still a pretty good description of Keras for our purposes, so we're going to stick with that for now.

Notebook

I put the rest of this post (and the linear model I built) in the notebook here. I might come back and try to embed it in this post at some point, but I really don't want to deal with the formatting right now, and I think it makes more sense in the original notebook.