Day Fifteen

So I've actually been working on deep learning every day since my last update. I wrote the first version of the code that would become this post on Thursday, but it took a really long time to get to the point where I could explain most of it even somewhat coherently.

Let's go back to the VGG model we first encountered way back on Day Three.

from vgg16 import Vgg16

batch_size = 64

vgg = Vgg16()

batch_training = vgg.get_batches("/data/train/", batch_size=batch_size)

batch_valid = vgg.get_batches("/data/valid/", batch_size=batch_size)

vgg.finetune(batch_training)

vgg.fit(batch_training, batch_valid, nb_epoch=1)

We should now be able to start making sense of most of this, even if we don't know Python.

from vgg16 import Vgg16

Import the VGG-16 model that lives in the Python file vgg16.py, which should be sitting in the same directory as our Python script.

batch_size = 64

Declare a variable called batch_size and give it the value 64. Whenever we refer to our batch_size variable in the future, we'll get back the number 64.

vgg = Vgg16()

Declare a variable called vgg and give it a copy of the VGG-16 model.

batch_training = vgg.get_batches("/data/train/", batch_size=batch_size)

batch_valid = vgg.get_batches("/data/valid/", batch_size=batch_size)

Get the cat and dog images and their labels from our training and validation folders, and store them as batches in two variables called batch_training and batch_valid.
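If "batches" still feels a bit abstract, here is a minimal sketch of the chunking idea in plain Python. This is not how vgg16.py does it (the real get_batches reads images from disk); make_batches and the fake file names are made up purely for illustration.

```python
def make_batches(samples, batch_size):
    """Yield successive lists of at most batch_size items from samples."""
    for start in range(0, len(samples), batch_size):
        yield samples[start:start + batch_size]

# Hypothetical stand-in data: 150 (filename, label) pairs, not real images.
samples = [("img_%d.jpg" % i, "cat" if i % 2 == 0 else "dog") for i in range(150)]

batches = list(make_batches(samples, batch_size=64))
batch_sizes = [len(batch) for batch in batches]
print(batch_sizes)  # [64, 64, 22]
```

The last batch is smaller because 150 doesn't divide evenly by 64. The model only ever sees one of these chunks at a time.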

vgg.finetune(batch_training)

Take our copy of the VGG-16 model and fine-tune it so that it predicts only the probability that an image contains a cat or a dog, instead of the probability that the image contains an object belonging to one of the categories VGG-16 was originally trained on.

This is the function we're going to explore today.
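As a rough mental model before digging in, fine-tuning can be pictured as swapping the last layer of a trained network for a new one sized to our own categories, while leaving everything else alone. The toy below is only a sketch of that idea under my own assumptions: the Layer class, the layer names, and this finetune function are hypothetical and are not the code in vgg16.py.

```python
from dataclasses import dataclass

@dataclass
class Layer:
    name: str
    outputs: int
    trainable: bool = True

def finetune(layers, num_classes):
    """Return a new layer list: old layers frozen, final layer replaced."""
    # Keep everything except the last layer, and mark it as not trainable.
    kept = [Layer(layer.name, layer.outputs, trainable=False) for layer in layers[:-1]]
    # Add a fresh, trainable output layer with one output per class.
    return kept + [Layer("new_output", num_classes, trainable=True)]

# Toy stand-in for a pretrained network with a 1000-category output.
pretrained = [
    Layer("conv_blocks", 25088),
    Layer("dense_1", 4096),
    Layer("dense_2", 4096),
    Layer("predictions", 1000),
]

finetuned = finetune(pretrained, num_classes=2)
for layer in finetuned:
    print(layer.name, layer.outputs, layer.trainable)
```

Only the new two-output layer is left trainable, which is why this kind of training can be so much quicker than training the whole network from scratch.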

vgg.fit(batch_training, batch_valid, nb_epoch=1)

Fit our copy of the VGG-16 model against the data in our training set, and validate that model against the data in our validation set.
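To make "fit, then validate" a bit more concrete, here is a tiny sketch of what one epoch looks like for a much simpler model: a one-number logistic regression trained on made-up data. Everything here (the fit and predict functions, the lr parameter, the data) is my own illustration and an assumption, not what vgg.fit actually runs.

```python
import math

def predict(weight, bias, x):
    """Probability that x belongs to class 1 under a 1-D logistic model."""
    return 1.0 / (1.0 + math.exp(-(weight * x + bias)))

def fit(train_batches, valid_batches, nb_epoch=1, lr=0.5):
    """Train on every training batch, then score on the validation batches."""
    weight, bias = 0.0, 0.0
    history = []
    for _ in range(nb_epoch):
        # Training: nudge the parameters once per batch.
        for batch in train_batches:
            grad_w = sum((predict(weight, bias, x) - y) * x for x, y in batch) / len(batch)
            grad_b = sum(predict(weight, bias, x) - y for x, y in batch) / len(batch)
            weight -= lr * grad_w
            bias -= lr * grad_b
        # Validation: measure accuracy without changing the parameters.
        correct = total = 0
        for batch in valid_batches:
            for x, y in batch:
                correct += int((predict(weight, bias, x) >= 0.5) == (y == 1))
                total += 1
        history.append(correct / total)
    return weight, bias, history

# Made-up data: negative numbers are class 0, positive numbers are class 1.
train_batches = [
    [(-2.0, 0), (-1.0, 0), (1.0, 1), (2.0, 1)],
    [(-1.5, 0), (-0.5, 0), (0.5, 1), (1.5, 1)],
]
valid_batches = [[(-3.0, 0), (3.0, 1)]]

weight, bias, history = fit(train_batches, valid_batches, nb_epoch=1)
print(history)  # one validation accuracy per epoch
```

The shape is the important part: the training batches change the model, the validation batches only measure it, and nb_epoch says how many times to repeat the whole pass.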

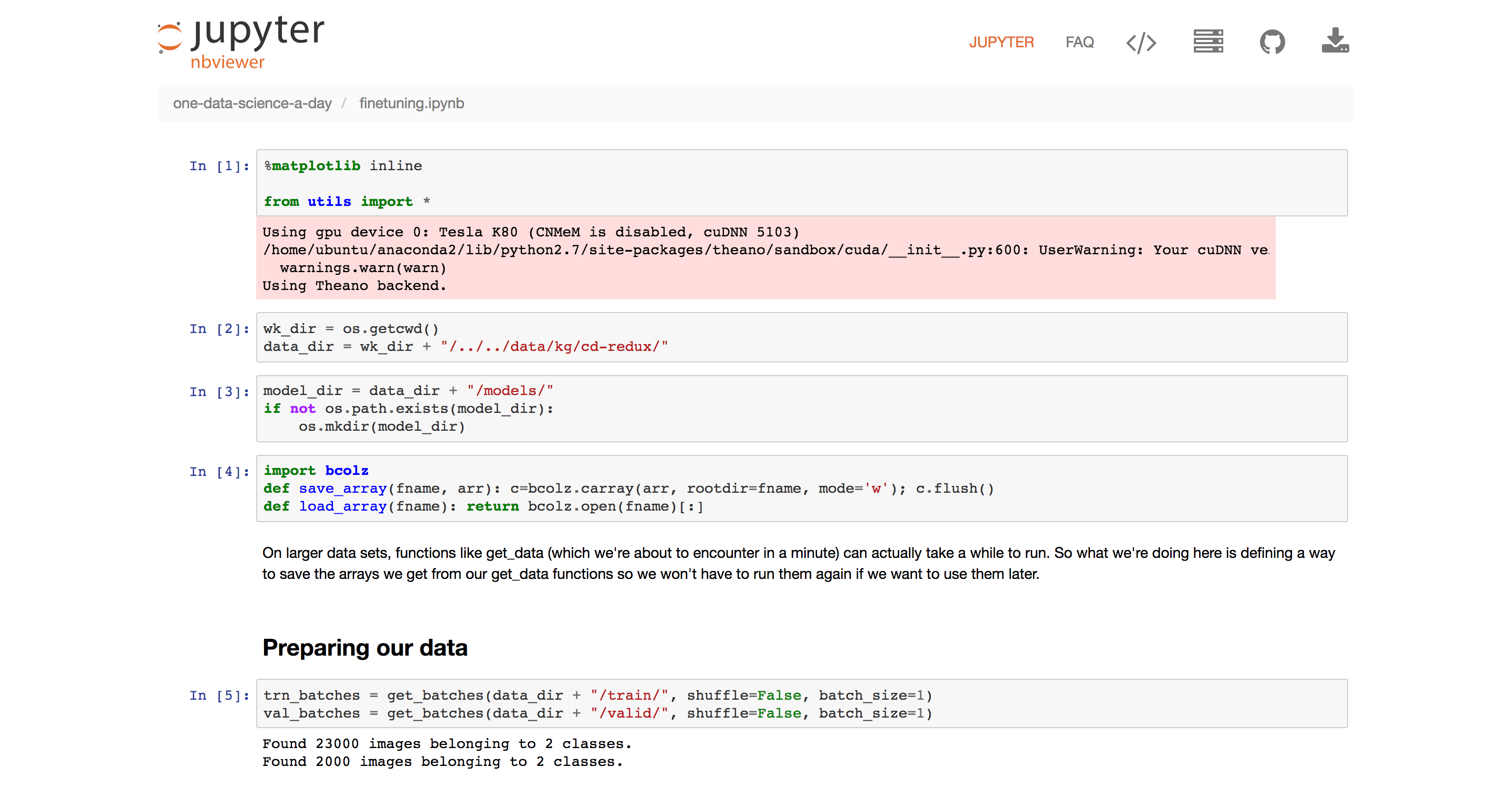

Just like when we did our Keras linear model, the rest of this post is in a notebook. I think a lot of posts are going to be in notebooks from here on out.