Day Sixteen

The day I published Day Fifteen, I ended up working on the post for about nine hours straight, and I think it kind of damaged me.

In the time since, it definitely feels like my learning has outpaced my writing by quite a bit. I have a list of things I understand a little better now but haven't had the chance to write about yet:

- Dense/fully-connected layers

- Convolutions and convolutional layers

- Activations - ReLU, Softmax

- Normalization

- Data augmentation

- Dropout

- Ensemble models

And some things I'll need to understand a little better before I can write about them:

- Gradient descent

- Backpropagation

- Pre-computing

But apparently, once we're done with all of this, we can wrap up the topic of convolutional neural networks and move on, because CNNs aren't even the only type of neural network.

I've been working on the State Farm Distracted Driver Detection dataset this weekend, which has also been a source of some grief.

But I think I've got it almost figured out.

THREE WEEKS LATER

I didn't have it almost figured out.

Even just trying to parse the State Farm data consistently results in a disconnected kernel, which sounds painful; it's also something I know absolutely nothing about and have so far been unable to fix.

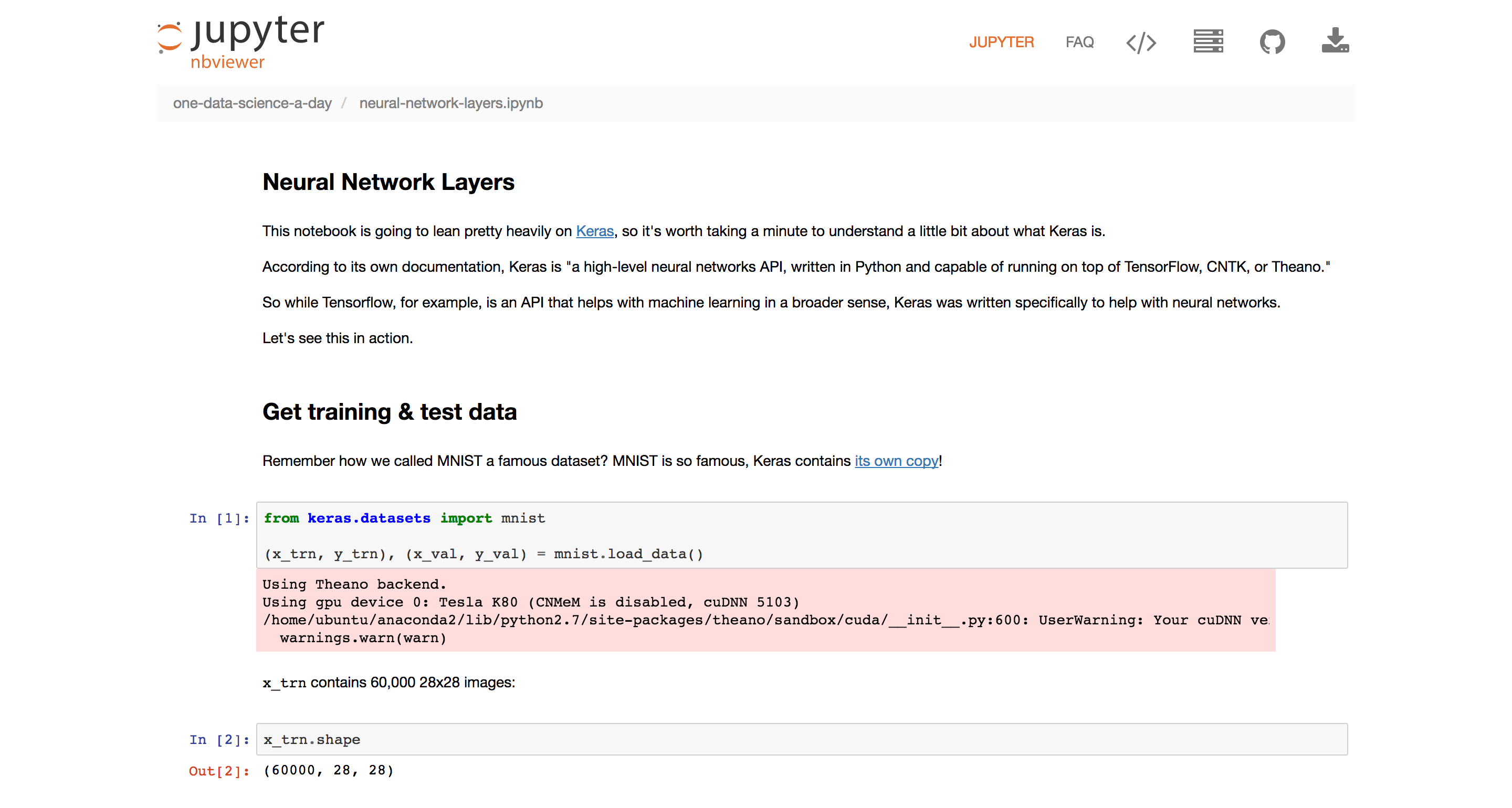

So let's forget about State Farm for now and turn our attention to neural network layers and the MNIST data set.

MNIST

Did you know that there are famous datasets? Well, there are famous datasets. ImageNet (the dataset VGG was trained on) is one of them. A Quartz piece calls ImageNet "[the dataset] that transformed AI research" and is well worth reading.

Another famous dataset is MNIST, a database of 70,000 handwritten digits (0 through 9), split into 60,000 training images and 10,000 test images, that shows up in countless introductory machine learning courses.

What's so great about MNIST? Well, it's lightweight, for one. The MNIST images are small (28x28 pixels) and grayscale. Remember the shape of a single image from our cats and dogs dataset?

```
(3, 224, 224)
```

Each of the three color channels (R, G, B) forms its own layer of 224x224 pixel values. Because an MNIST image is grayscale, each pixel is just a single intensity value between 0 and 255, so MNIST bypasses this foolishness altogether:

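```
(28, 28)
```

(Convolutional layers still expect an explicit channel axis, which is why the model further down uses an input shape of (1, 28, 28): one channel of 28x28 pixels.)

MNIST also has a well-publicized global leaderboard that we can use to benchmark the performance of our own models. At the time of writing, the top-ranked method was "Regularization of Neural Networks using DropConnect", with an error rate of 0.21%.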
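To make the shape comparison a bit more concrete, here's a small NumPy sketch. It uses random arrays as stand-ins for real images (nothing is actually loaded from ImageNet or MNIST), adds a channel axis so each image matches `input_shape=(1, 28, 28)`, and shows one common way to normalize pixel values: subtract the mean and divide by the standard deviation. The `normalize` function here is a hypothetical illustration, not necessarily what `mnist_normalize` in the notebook does.

```python
import numpy as np

# Random stand-ins, NOT real images: one 224x224 RGB image stored
# channels-first, and a batch of five 28x28 single-channel "digits".
rng = np.random.default_rng(0)
color_image = rng.integers(0, 256, size=(3, 224, 224), dtype=np.uint8)
mnist_batch = rng.integers(0, 256, size=(5, 28, 28), dtype=np.uint8)

print(color_image.size)     # 3 * 224 * 224 = 150528 values per color image
print(mnist_batch[0].size)  # 28 * 28 = 784 values per MNIST image

# Add an explicit channel axis: (N, 28, 28) -> (N, 1, 28, 28),
# so each image matches input_shape=(1, 28, 28).
batch = np.expand_dims(mnist_batch, axis=1).astype(np.float32)
print(batch.shape)          # (5, 1, 28, 28)

# Hypothetical normalization (an assumed, common choice): subtract the
# mean pixel value and divide by the standard deviation of the data.
mean_px = batch.mean()
std_px = batch.std()

def normalize(x):
    return (x - mean_px) / std_px

normalized = normalize(batch)  # now centered near 0 with spread near 1
```

A single color image carries almost 200 times as many values as an MNIST digit, which is a big part of why MNIST is so quick to experiment with.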

The dataset was created by Yann LeCun (now at Facebook), Corinna Cortes (Google), and Christopher Burges (Microsoft). Alright! Let's use MNIST to better understand the layers in a neural network.

The following snippet contains a preview of the different layers (Lambda, ZeroPadding, Convolution, MaxPooling, Flatten, Dense) we're going to cover...

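```python
# Keras 1.x API (channels-first input, as used with the Theano backend)
from keras.models import Sequential
from keras.layers import (Lambda, ZeroPadding2D, Convolution2D,
                          MaxPooling2D, Flatten, Dense)

# mnist_normalize is defined elsewhere in the notebook (not shown here);
# going by its name, it normalizes the raw pixel values.
model = Sequential([
    Lambda(mnist_normalize, input_shape=(1, 28, 28)),
    # First block: two 3x3 convolutions with 32 filters, then 2x2 pooling
    ZeroPadding2D(),
    Convolution2D(32, 3, 3, activation="relu"),
    ZeroPadding2D(),
    Convolution2D(32, 3, 3, activation="relu"),
    MaxPooling2D(),
    # Second block: same pattern with 64 filters
    ZeroPadding2D(),
    Convolution2D(64, 3, 3, activation="relu"),
    ZeroPadding2D(),
    Convolution2D(64, 3, 3, activation="relu"),
    MaxPooling2D(),
    # Classifier: flatten to a vector, then fully-connected layers
    Flatten(),
    Dense(512, activation="relu"),
    Dense(10, activation="softmax"),  # one probability per digit 0-9
])
```

... but the rest of the post itself is going to be (you guessed it) in a notebook.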
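One way to see what each layer in the model snippet above does is to trace the shape of the data through it by hand. The sketch below is plain Python (no Keras required) and assumes Keras's defaults for these layers: `ZeroPadding2D()` pads one pixel on every side, `Convolution2D(n, 3, 3)` uses "valid" borders with stride 1, and `MaxPooling2D()` uses a 2x2 window with stride 2. It also tallies trainable parameters (weights plus biases). `LAYERS` and `trace` are hypothetical helpers written for this illustration; the `Lambda` layer is left out because it changes neither the shape nor the parameter count.

```python
# Hypothetical description of the model's layer stack: (kind, size)
LAYERS = [
    ("pad", None), ("conv", 32), ("pad", None), ("conv", 32), ("pool", None),
    ("pad", None), ("conv", 64), ("pad", None), ("conv", 64), ("pool", None),
    ("flatten", None), ("dense", 512), ("dense", 10),
]

def trace(input_shape=(1, 28, 28), kernel=3):
    shape = input_shape
    params = 0
    for kind, n in LAYERS:
        if kind == "pad":        # ZeroPadding2D(): +1 pixel on each side
            c, h, w = shape
            shape = (c, h + 2, w + 2)
        elif kind == "conv":     # Convolution2D(n, 3, 3), 'valid' borders
            c, h, w = shape
            params += n * (c * kernel * kernel) + n  # weights + biases
            shape = (n, h - kernel + 1, w - kernel + 1)
        elif kind == "pool":     # MaxPooling2D(): 2x2 window, stride 2
            c, h, w = shape
            shape = (c, h // 2, w // 2)
        elif kind == "flatten":  # Flatten(): collapse into one long vector
            c, h, w = shape
            shape = (c * h * w,)
        elif kind == "dense":    # Dense(n): every input connects to every unit
            (d,) = shape
            params += d * n + n
            shape = (n,)
        print(f"{kind:<8}{shape}")
    return shape, params

final_shape, total_params = trace()
print(total_params)  # prints 1676266
```

Each `ZeroPadding2D`/`Convolution2D` pair leaves the height and width unchanged, so only the pooling layers shrink the image (28 to 14 to 7). The final shape is `(10,)`, one output per digit, and roughly 96% of the 1,676,266 parameters sit in the first `Dense` layer, which connects all 3,136 flattened values to 512 units.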