Day Seven

Yesterday, I made it into the top 33% of the Kaggle leaderboard for the Dogs vs. Cats competition. I think I can do better.

Running a few more epochs and messing around with the model's learning rate gives me a new top score of 0.07407, bumping me up more than 200 spots to 205th place. What's an epoch? Do I have any idea what I just did to basically double the performance of my model in a single day? No. But that's why I'm here: to learn!

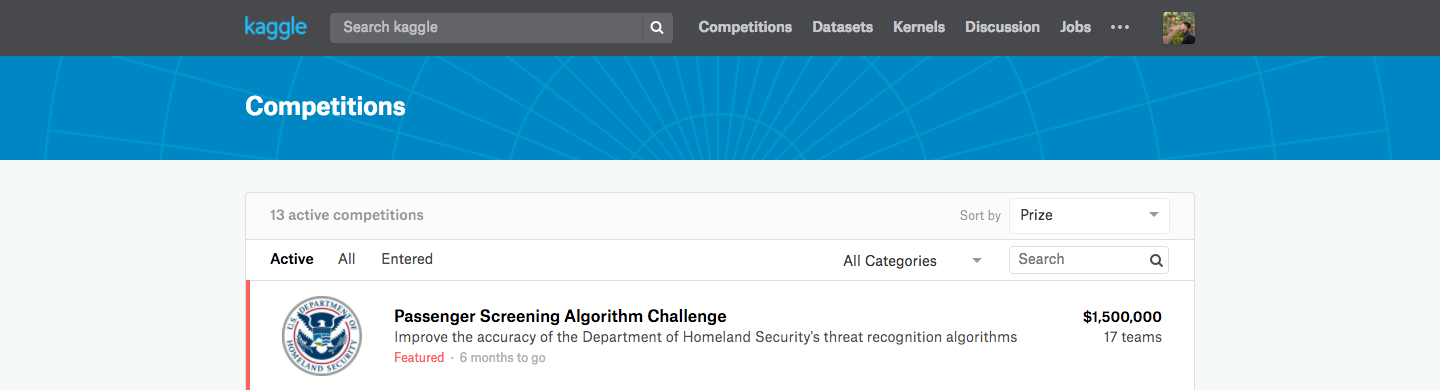

I head back to Kaggle in search of a different data set to practice on. The competition at the very top of the homepage looks... interesting.

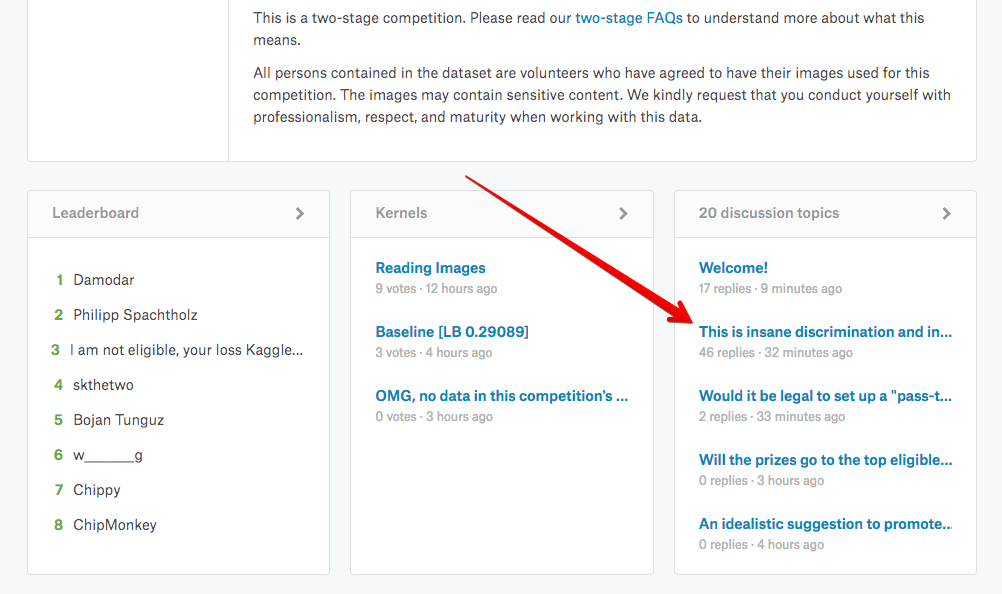

The prize is a million dollars? Time to quit my job and start buying up GPUs on - wait.

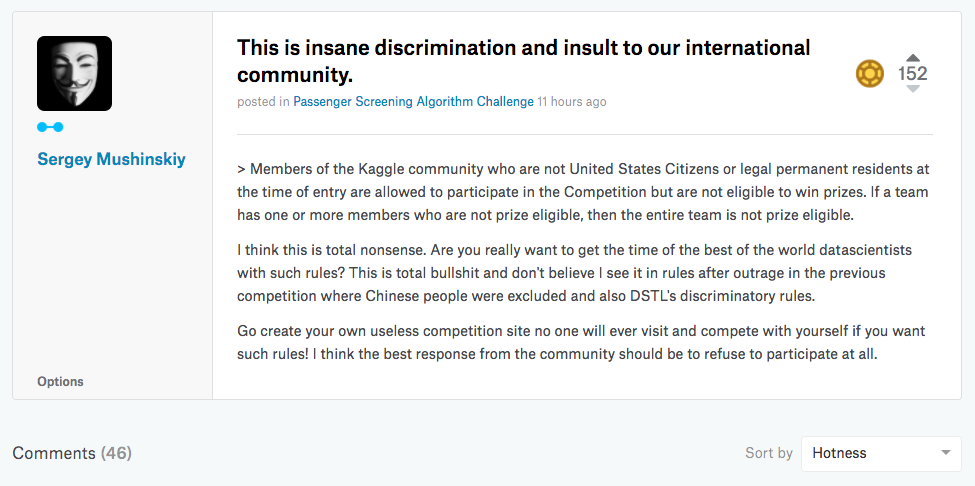

Oh.

Foiled again by the TSA.

Well, the competition might be a representation of everything I think is wrong with the world, but at least I can still practice on the data.

The data for stage one is more than three terabytes in size.

Never mind.

I end up finding a nice little competition where I get to identify a species of hydrangea instead. It takes two hours, but I get a rank of 134, which puts me in the... 62nd percentile? Outrageous.

One of the uploaded notebooks is called "use Keras pre-trained VGG16 acc 98%". Okay, that's exactly what I did, only I got an accuracy of 95%. What does this person know that I don't?

Checking their notebook, the answer seems to be "quite a lot". Something that jumps out immediately is the number of epochs run. I ran 5, and thought that was pretty intense. This person ran 50.
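For the record, an epoch is one full pass over the training data. Here is a rough sketch of why running more of them can help. It is a toy, not the VGG16 setup from either notebook: it fits a single weight to four made-up points with plain gradient descent, and every name and number in it (`data`, `train`, the learning rate of 0.01) is an assumption picked purely for illustration.

```python
# Toy illustration only: fit y = w * x on four made-up points.
# Nothing here comes from the Kaggle notebooks; names and values are hypothetical.
data = [(1.0, 2.0), (2.0, 4.0), (3.0, 6.0), (4.0, 8.0)]  # generated with w = 2


def mean_squared_error(w):
    """Average squared gap between the prediction w * x and the target y."""
    return sum((w * x - y) ** 2 for x, y in data) / len(data)


def train(epochs, learning_rate=0.01):
    """Run gradient descent for the given number of passes over the data."""
    w = 0.0  # start from a deliberately bad guess
    for _ in range(epochs):  # one epoch = one full pass over every example
        for x, y in data:
            gradient = 2 * (w * x - y) * x  # derivative of (w*x - y)^2 w.r.t. w
            w -= learning_rate * gradient  # the learning rate sets the step size
    return w


for epochs in (5, 50):
    w = train(epochs)
    print(epochs, "epochs -> w =", w, "error =", mean_squared_error(w))
```

In this toy, 50 passes land much closer to the true weight than 5 do. A real network is messier, since too many epochs can overfit the training set, so take it as intuition rather than a recipe.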

Hmm.